Collaborative robots as a remedy for the shortage of skilled workers – how can this work in industry and trade? At the COBOTS4YOU trade fair at the Vogel Convention Center in Würzburg, exhibitors presented their solutions to this question. Prof. Dr. Tobias Kaupp from the Center for Robotics (CERI) at the Würzburg-Schweinfurt University of Applied Sciences (THWS) gave the keynote address and presented the latest research results on how to make the collaboration between humans and cobots (collaborative robots) safer.

Industrial robots are a familiar sight, particularly in the automotive industry: fully automated robotic arms are part of the mass production line, and no human comes anywhere near them. We can only speak of collaboration when humans and robots work together on a task. Even then, the robot usually sits in an enclosure or behind a protective fence, or humans and robots are each confined to their own assigned areas.

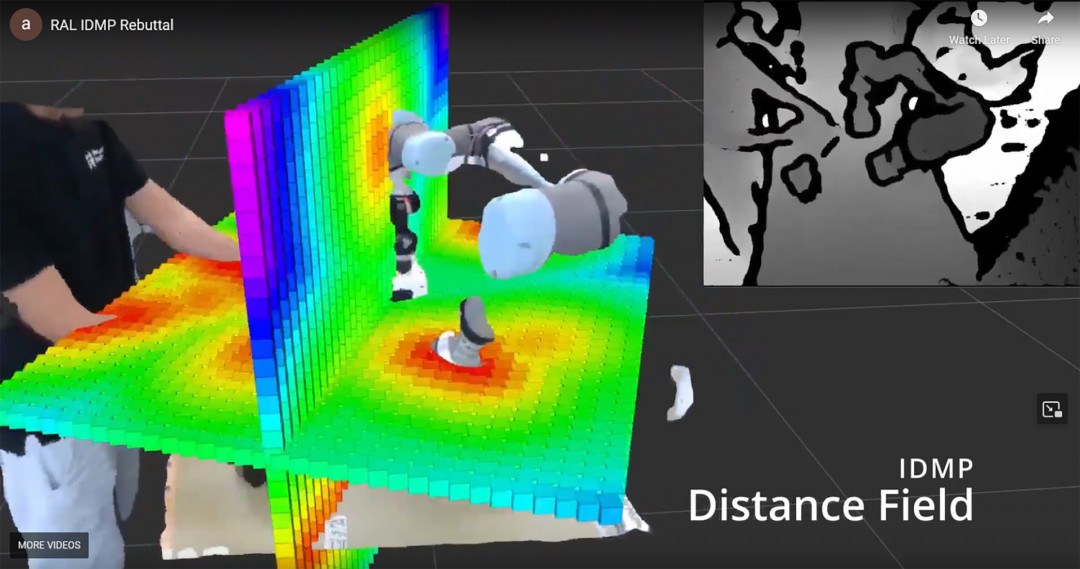

Using robots as a ‘third hand’ for humans, for example, multiplies the number of possible applications, but the necessary physical proximity brings a significantly greater risk of accidents, according to Prof. Dr. Kaupp. He explained the conflict between productivity and safety: the usual solution is to make robots move slowly, which in turn reduces productivity. A highly dynamic environment also calls for non-contact safety technology, so that humans and robots do not get in each other's way even though both have to move quickly to perform their tasks. To achieve this, a team of CERI researchers and scientists from the University of Technology, Sydney (Australia) jointly developed a real-time, fine-grained workspace monitoring system: ‘The robot knows the distance and direction of the nearest object for every point in the workspace,’ explained Prof. Kaupp. With this information, the robot can use reactive motion planning and thus react to unforeseen events in good time.
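To make the idea of knowing "the distance and direction of the nearest object for every point in the workspace" more concrete, here is a minimal Python sketch. It is not the software developed by the CERI and Sydney team, whose implementation is not described here. It assumes a small 2D occupancy grid and a brute-force nearest-obstacle search, and the names `distance_field` and `evasive_step` are hypothetical, chosen only for illustration.

```python
import numpy as np


def distance_field(occupied, cell_size=1.0):
    """Distance to, and unit direction toward, the nearest occupied cell.

    `occupied` is a 2D boolean grid (an assumed, simplified workspace model).
    Brute force over all cell/obstacle pairs: fine for a toy grid only.
    """
    rows, cols = occupied.shape
    obstacles = np.argwhere(occupied).astype(float)          # (K, 2) row/col
    if len(obstacles) == 0:
        raise ValueError("grid contains no occupied cells")
    cells = np.indices((rows, cols)).reshape(2, -1).T.astype(float)  # (N, 2)
    offsets = obstacles[None, :, :] - cells[:, None, :]      # (N, K, 2)
    dists = np.linalg.norm(offsets, axis=2)                  # (N, K)
    nearest = dists.argmin(axis=1)                           # closest obstacle
    idx = np.arange(len(cells))
    d = dists[idx, nearest]
    vec = offsets[idx, nearest]
    safe_d = np.where(d > 0, d, 1.0)                         # avoid divide by 0
    direction = vec / safe_d[:, None]                        # zero on obstacles
    return (d * cell_size).reshape(rows, cols), direction.reshape(rows, cols, 2)


def evasive_step(position, dist, direction, safety_margin, step=1.0):
    """Hypothetical reactive rule: back away if an object is too close."""
    r, c = position
    if dist[r, c] >= safety_margin:
        return np.zeros(2)                                   # no reaction needed
    return -step * direction[r, c]                           # move away from it


# Toy scene: one object at row 2, column 4 of a 5 x 5 workspace.
grid = np.zeros((5, 5), dtype=bool)
grid[2, 4] = True
dist, direction = distance_field(grid)
move = evasive_step((2, 2), dist, direction, safety_margin=3.0)
```

In this toy scene the object is two cells to the right of the robot cell at `(2, 2)`, so the rule returns a step of one cell to the left. Recomputing the field on every sensor frame is what would let such a rule follow a moving object; doing that quickly enough for a real workspace requires a far more efficient method than this brute-force search.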

As an example, Prof. Kaupp showed a film sequence in which a ball rolls past the robot. Previous software solutions have not been able to represent this dynamic scene adequately. In contrast, the software developed by the German-Australian research team maps the situation realistically, enabling the cobot to react, for example, with an evasive movement. Depth cameras or LiDAR sensors serve as the sensors for workspace monitoring, and several can be used simultaneously. Another advantage of the solution is that CPU computing power is sufficient, so a supercomputer is not required for real-time mapping.

In addition to the keynote, a team of doctoral students and scientific and technical staff from CERI presented their current research projects. CERI members and professors from the Center for Artificial Intelligence (CAIRO) at THWS also discussed potential cooperation opportunities with industry visitors.